Tom Goddard

January 13, 2014

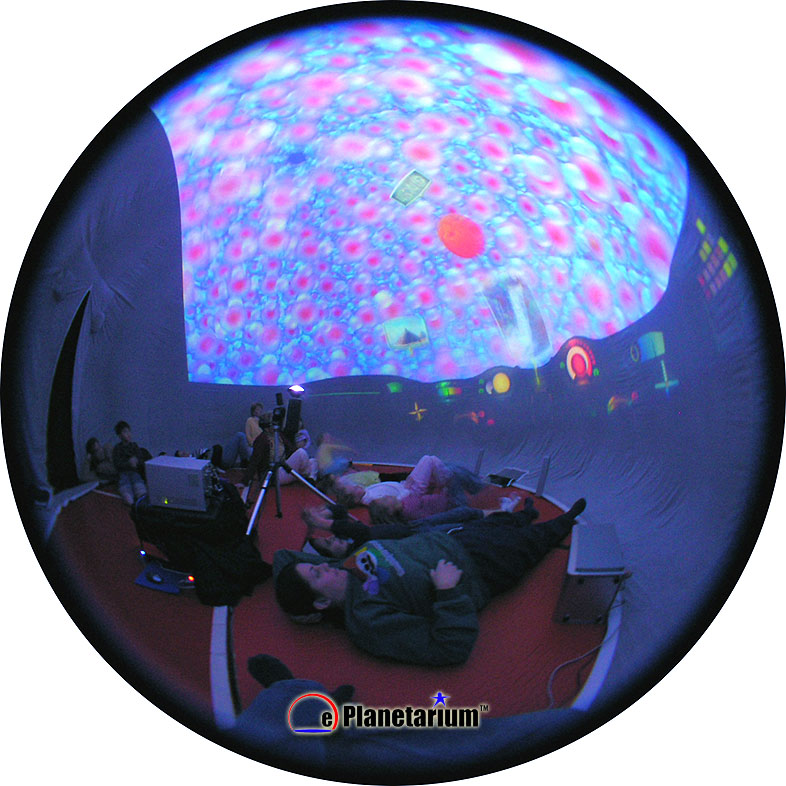

The Biophysical Society Annual Meeting will have an inflatable planetarium dome to show animations. The meeting is in San Francisco, February 15-19, with 7000 researchers attending. The animations are aimed at high school students as science outreach. Ed Egelman heads the outreach committee, and Wah Chiu and Matt Dougherty arrange the dome setup and show. Wah asked us to provide a video this year. Two years ago I made an HIV RNA molecular animation for the first dome show at the BPS meeting.

From the December Biophysical Society newsletter

Sunday, February 16 - Tuesday, February 18, 10:00 am - 5:00 pm

Wednesday, February 19, 9:00 am - 1:00 pm

"Watch cells and viruses come to life in an audio-visual immersion experience! The 3-D Biomolecular Discovery Dome will feature films that will take you inside the exciting world of cells, all while showing how difficult biophysical topics can be made accessible to a broad audience. The Biomolecular Discovery Dome will be open to Meeting attendees daily from Sunday through Wednesday. This portable dome is being sponsored by the Public Affairs Committee for the third consecutive year."

I think the discovery dome used at the meeting comes from the Houston Museum of Natural Science.

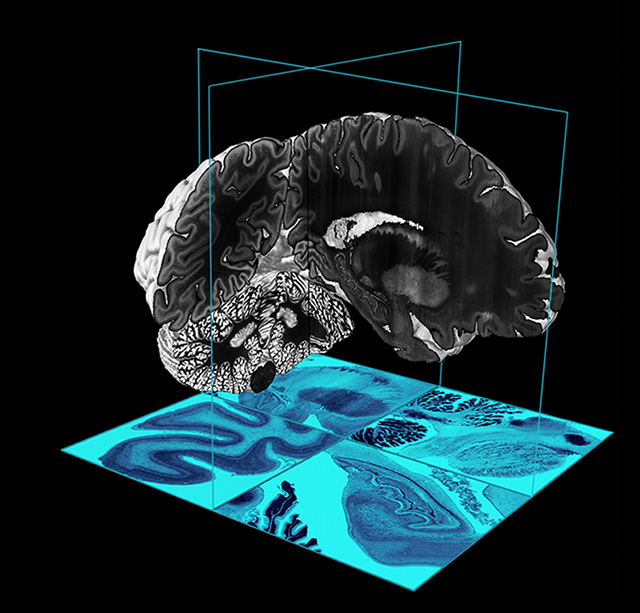

The aim was to make a 3 minute video showing brain images from optical and electron microscopy, traced neurons, and a molecular ion channel at a neural synapse, with music and brief narration. It is for a general public that has little exposure to raw science data. The intent is to inspire rather than educate.

It had to be made in just 1 week of full-time effort. Typical planetarium show production costs $10,000 per minute, according to the Hilo Planetarium director, so a 3 minute piece at that quality level would be about a 3 month effort ($30,000).

After my first draft I listed 35 problems. I fixed almost none of those given the time constraints.

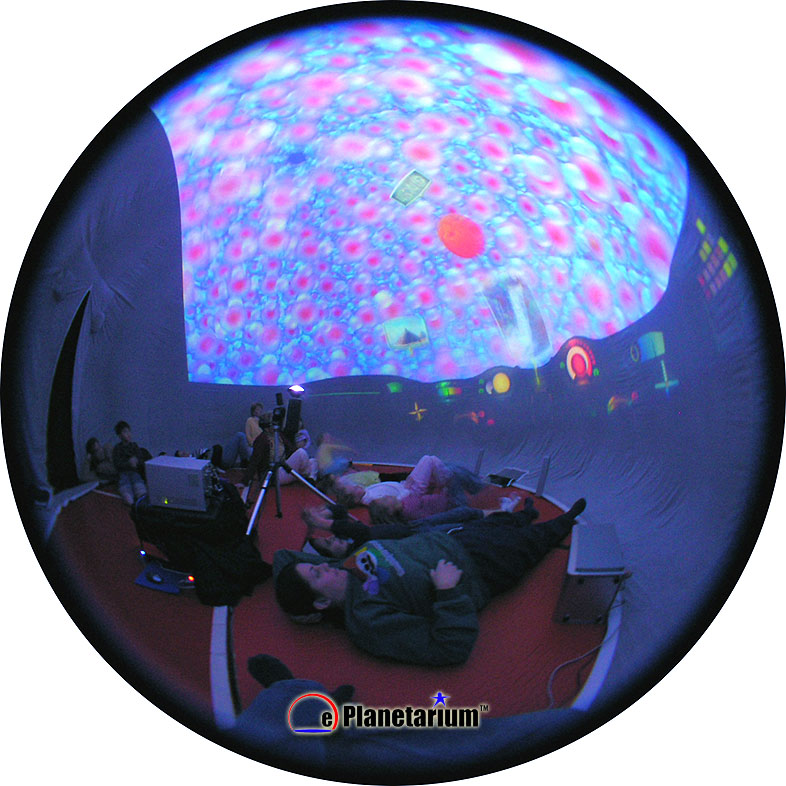

Chimera can make dome projection images that map the dome hemisphere onto a circle, with the distance from the circle's center corresponding to the angle from the dome's zenith. Greg Couch added this camera mode a few years ago.

These images get warped so that a projector with a fish-eye lens, or one shining onto a spherical mirror, can display them on a dome ceiling.
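A minimal sketch of the hemisphere-to-circle mapping, assuming an equidistant (angular) fisheye in which the distance from the image center grows linearly with the angle from the zenith, so the dome horizon (90 degrees) lands on the edge of the circle. The function name and axis conventions are assumptions for illustration, not Chimera's actual camera code.

```python
import math

def dome_image_xy(direction, image_size):
    """Map a 3D view direction to (x, y) pixel coordinates in a square dome image.

    Assumes an equidistant fisheye: +z is the dome zenith, image radius is
    proportional to the angle from the zenith, and the 90 degree horizon falls
    on the edge of the inscribed circle. Image y follows the dome y axis; flip
    it if image rows run top to bottom. Returns None below the horizon.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    zenith_angle = math.acos(max(-1.0, min(1.0, z)))  # 0 at zenith, pi/2 at horizon
    if zenith_angle > math.pi / 2:
        return None  # below the dome horizon, not displayed
    azimuth = math.atan2(y, x)
    half = image_size / 2.0
    r = (zenith_angle / (math.pi / 2)) * half  # linear in angle from zenith
    return (half + r * math.cos(azimuth), half + r * math.sin(azimuth))
```

With a 1000 pixel image, the zenith lands at the center (500, 500) and a horizontal direction lands on the circle's edge, 500 pixels from the center; a direction 45 degrees up from the horizon lands halfway out.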

Half dome version of my dome brain video on YouTube.

The Big Brain project by the Montreal Neurological Institute at McGill University, published in Science in June 2013 (BigBrain: An Ultrahigh-Resolution 3D Human Brain Model), imaged a whole human brain at the highest resolution to date, 10 by 10 um pixels with 20 um thick slices.
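The quoted sampling gives a quick sense of the data volume. This sketch uses only the 10 by 10 um pixel size and 20 um slice thickness above; the one-byte-per-voxel figure is an assumption for illustration, and the real dataset's storage format may differ.

```python
def voxels_per_cubic_cm(pixel_um=10.0, slice_um=20.0):
    """Number of voxels in 1 cm^3 for a given in-plane pixel size and slice thickness (micrometers)."""
    voxel_volume_um3 = pixel_um * pixel_um * slice_um  # 10 * 10 * 20 = 2000 um^3
    cubic_cm_in_um3 = 1e4 ** 3  # 1 cm = 10^4 um, so 1 cm^3 = 10^12 um^3
    return cubic_cm_in_um3 / voxel_volume_um3

n = voxels_per_cubic_cm()
print(f"{n:.1e} voxels per cm^3")  # 5.0e+08
print(f"{n / 1e9:.1f} GB per cm^3 at an assumed 1 byte per voxel")  # 0.5
```

At roughly half a gigabyte per cubic centimeter even at one byte per voxel, a whole brain at this resolution runs to hundreds of gigabytes or more.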

Big Brain in Chimera, and a demo I showed in the Viz Vault in July to students in the COSMOS summer science program, plus another spin-off demo on a snake venom neurotoxin.

Matt Dougherty pointed me to the Neurodome Kickstarter project and the Neurodome web site.

They are producing a 5 minute planetarium animation of astronomy and brain images about why people are curious, due out in summer 2014. They raised $27,000 in small contributions on the Kickstarter crowd-funding web site. For donations of $5500, they offered to do an fMRI scan of your brain and tour your brain in the animation. No takers!

It is difficult to get access to a planetarium dome, but stereo goggles with a wide field of view and head tracking let you look in all directions, in 3D, giving a similarly immersive experience.

Not a consumer product yet. Oculus Rift goggles cost $300, but they are not available to consumers (release is speculated for late 2014 or early 2015). Graham Johnson has a developer version that I got working with the Hydra desktop app.

Cell phone technology. The Oculus Rift is essentially a cell phone in a pair of ski goggles, with a lens for each eye to magnify each half of the single LCD flat panel. It has a standard cell phone accelerometer (measures gravity, the down direction), magnetometer (measures the north direction), and gyroscope (tracks rotations). A cord carries the video feed from a computer.
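How the three sensor readings are combined is not described here. One common textbook technique is a complementary filter: integrate the fast but drifting gyroscope rate, and slowly pull the result toward the tilt implied by the accelerometer's gravity vector. This is a minimal single-axis sketch of that general technique, not the Oculus SDK's actual sensor fusion; the blend factor alpha = 0.98 and the function names are assumptions.

```python
import math

def accel_pitch(ax, ay, az):
    """Pitch angle (radians) implied by the gravity direction the accelerometer measures."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))

def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """One update step of a single-axis complementary filter (alpha is an assumed value).

    pitch: previous estimate (rad); gyro_rate: rotation rate about the pitch axis (rad/s);
    accel: (ax, ay, az) accelerometer reading; dt: time step (s).
    alpha near 1 trusts the gyroscope over short times, while (1 - alpha)
    slowly corrects gyroscope drift toward the accelerometer's gravity tilt.
    """
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)

# Head held level and still, but the gyroscope has a small constant bias (0.01 rad/s).
pitch = 0.0
for _ in range(1000):  # 10 seconds at an assumed 100 Hz update rate
    pitch = complementary_filter(pitch, 0.01, (0.0, 0.0, 9.81), 0.01)
# Pure gyroscope integration would have drifted 0.1 rad; the filter settles near 0.0049 rad.
```

Gravity says nothing about heading, so yaw drift would be corrected the same way using the magnetometer's north direction.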

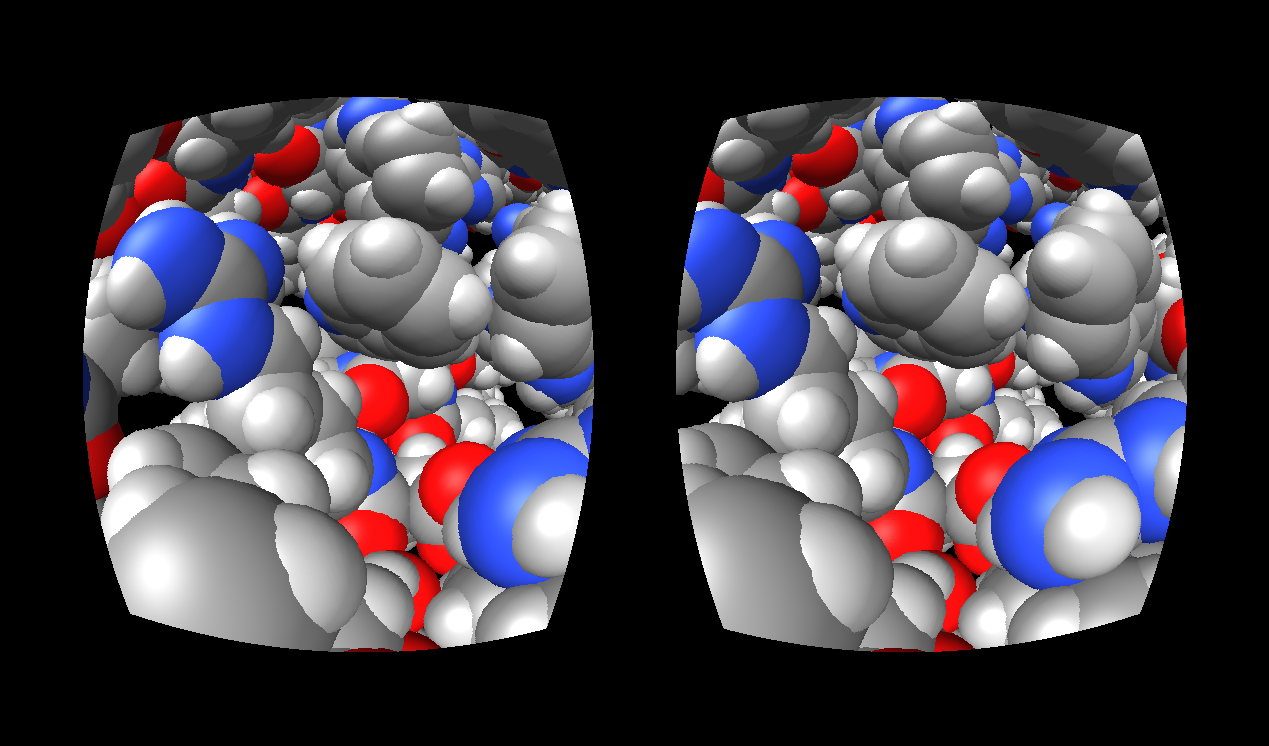

Needs device support in the application. Programming for the Oculus requires reading the view direction every frame and updating side-by-side stereo images (one for the left eye, one for the right eye). Each eye image must be corrected for the lens distortion using a GPU shader program built into your application as a post-processing rendering step.
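The two per-frame steps can be sketched outside a shader. This sketch splits a 1280 x 800 panel into left and right eye viewports, then applies a radial polynomial lookup that produces barrel distortion, a common way to cancel the pincushion distortion of a magnifying lens. The coefficients in `K` are hypothetical placeholders, not Oculus SDK values, and placing the lens axis at each viewport's center is a simplifying assumption. In a real application the lookup would run per pixel in a GPU fragment shader.

```python
def eye_viewports(panel_width=1280, panel_height=800):
    """Split a side-by-side stereo panel into (x, y, width, height) viewports, one per eye."""
    half = panel_width // 2
    return {"left": (0, 0, half, panel_height),
            "right": (half, 0, half, panel_height)}

def to_lens_coords(px, py, viewport):
    """Convert a panel pixel to coordinates centered on the eye viewport.

    Assumes the lens axis is at the viewport center (a simplification);
    coordinates are scaled so the viewport's half-width is 1.
    """
    x0, y0, w, h = viewport
    scale = w / 2.0
    return ((px - (x0 + w / 2.0)) / scale, (py - (y0 + h / 2.0)) / scale)

# Hypothetical distortion coefficients, for illustration only.
K = (1.0, 0.2, 0.1, 0.0)

def distort_lookup(u, v, k=K):
    """Radial polynomial lookup for a distortion-correction pass.

    For an output pixel at lens coordinates (u, v), return where to sample the
    undistorted eye image. With non-negative k[1:], the scale grows with radius,
    so edge pixels sample from farther out; the result is a barrel-distorted
    image that the lens's pincushion magnification straightens out.
    """
    r2 = u * u + v * v
    scale = k[0] + k[1] * r2 + k[2] * r2 * r2 + k[3] * r2 * r2 * r2
    return (u * scale, v * scale)

views = eye_viewports()
print(views["right"])  # (640, 0, 640, 800)
u, v = to_lens_coords(1280, 400, views["right"])  # right edge of the right eye image
print(distort_lookup(u, v))  # sample point pushed outward by the factor k0 + k1 + k2 + k3
```

Keeping the distortion as a separate post-processing pass means the scene itself is rendered with an ordinary perspective camera for each eye.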

Limited resolution. Resolution is low: 1280 x 800 total, so 640 x 800 pixels for each eye. Each eye image covers a 90 degree field of view. A desktop display typically spans about 30 degrees at normal viewing distance, one third of that angle, so the Oculus resolution is similar to a desktop display with 210 by 270 pixels. It is a bit better than that because the lenses give smaller pixels in the middle of the field and bigger ones near the edge.
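The 210 by 270 figure follows from scaling each eye's pixel counts by the ratio of the two fields of view. This sketch assumes, as the text does, the same 90 degree field of view in both directions and pixels spread evenly across it; the function name is illustrative.

```python
def equivalent_desktop_pixels(eye_width_px=640, eye_height_px=800,
                              eye_fov_deg=90.0, desktop_fov_deg=30.0):
    """Pixels covering a desktop display's field of view in one eye image.

    Assumes pixels are spread evenly across the eye's field of view (the real
    lenses make central pixels smaller) and the same field of view on both axes.
    """
    fraction = desktop_fov_deg / eye_fov_deg
    return eye_width_px * fraction, eye_height_px * fraction

w, h = equivalent_desktop_pixels()
print(round(w), round(h))  # 213 267
```

Equivalently, each eye gets only about 7 pixels per degree (640 / 90).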

Head tracking latency. As your head moves, an object should move on the display so that it appears stationary in space. Because of the delay (latency) in detecting head motion, objects don't stay in place during a head motion. The Oculus SDK emphasizes keeping latency below 40 milliseconds. At a 200 degree/second head rotation, that latency causes an 8 degree (200 × 0.04) jump in object position when you turn your head. This is quite noticeable and spoils the virtual world illusion somewhat.
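The size of the jump is simply head rotation speed times latency. This sketch evaluates it for the 200 degree/second rotation and 40 millisecond latency in the paragraph, plus a 20 millisecond latency for comparison; the function name is illustrative.

```python
def tracking_lag_degrees(head_rate_deg_per_s, latency_s):
    """Angle by which a world-fixed object appears displaced while the display catches up.

    The displayed view lags the true head orientation by rotation rate * latency.
    """
    return head_rate_deg_per_s * latency_s

for latency_ms in (40, 20):
    lag = tracking_lag_degrees(200.0, latency_ms / 1000.0)
    print(f"{latency_ms} ms latency at 200 deg/s: {lag:.1f} degree lag")
```

Halving the latency halves the jump, which is why latency budgets are stated in milliseconds.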

Images: Hydra showing stereo Oculus images with no lens correction; Hydra full-screen with lens correction.

Getting the Big Brain data: the web site only allows downloading one full-resolution slice at a time. Use wget with authentication cookies. A dozen Chimera bugs and limitations to overcome. Blocky shadows on the ion channel (show images of good and bad). Graphics rendering errors on 3 of 6000 frames at 4k resolution. How music and narration were added to the movie with Audacity and (old) QuickTime Pro 7.
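Downloading slice by slice lends itself to a small script that generates one wget command per slice. In this sketch the URL template, slice numbering, output file names, and cookie file name are hypothetical placeholders (the actual download URLs are not given here). `--load-cookies` (read a Netscape-format cookie file, e.g. exported from a logged-in browser session) and `-O` (name the output file) are standard wget options.

```python
import shlex

# Hypothetical URL template and cookie file, for illustration only.
URL_TEMPLATE = "https://example.org/bigbrain/slices/slice_{index:04d}.png"
COOKIE_FILE = "cookies.txt"

def wget_commands(first, last, url_template=URL_TEMPLATE, cookie_file=COOKIE_FILE):
    """Build one wget argument list per slice, reusing saved authentication cookies."""
    commands = []
    for index in range(first, last + 1):
        url = url_template.format(index=index)
        output = f"slice_{index:04d}.png"
        commands.append(["wget", "--load-cookies", cookie_file, "-O", output, url])
    return commands

if __name__ == "__main__":
    for cmd in wget_commands(1, 3):
        print(shlex.join(cmd))  # to actually download: subprocess.run(cmd, check=True)
```

Generating argument lists rather than shell strings keeps the commands safe to pass directly to `subprocess.run`, while `shlex.join` gives a copy-pasteable form for checking them first.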