VR with Multimodal Interactions

MEinVR: Multimodal Interaction Paradigms in Immersive Exploration. Yuan, ZY; Liu, Y and Yu, LY. 21st IEEE International Symposium on Mixed and Augmented Reality (ISMAR) Adjunct 2022, pp.85-90. [PDF]

- multimodal: using multiple modalities of user input, in this case hand controller + voice; others include gestures (w/o controllers), head or foot postures, gaze

- combining imprecise inputs of multiple modalities allows getting a precise result

- imprecise spatial and verbal inputs are more like natural human interactions than highly precise pointing in 3D or having to speak with a rigid grammar and limited ontology

Some Limitations of VR Hand Controllers

- switching modes (rotation, translation, selection, etc.) is unintuitive and has a high learning curve:

- if the different modes are accessed with different controller buttons, the user has to learn/remember which button does what

- “accidents” happen when one forgets which mode is active or uses the wrong button

- 2D interfaces for mode switching (e.g., icon bar or menu) are not generally recommended in immersive environments; issues include size of the display and occlusion of the scene

- in the immersive environment, precise specification/selection in 3D is more difficult than one might think: judging depth, dealing with occlusion, etc., particularly if the structure is not yet well understood

Natural User Interfaces, Natural Language Interfaces

Existing NLIs for visualization were developed for/in desktop environments. These include:

- Articulate – machine learning-assisted, parses queries into commands

- DataTone – keyboard or voice input, keeps track of user corrections

- Eviza – users can iteratively modify queries

MEinVR builds upon these existing methods for a VR environment, with design goals to:

- minimize user learning costs and cognitive load

- reduce the accuracy requirements of each type of input

- play to the strengths and avoid the weaknesses of each type of input

- voice commands can refer to hidden or occluded parts

- pointing naturally conveys positions that are hard to describe in words alone

- controller movements naturally convey approximate distances and angles

Questions:

- How best to combine controller and speech input?

- Can the combination be used for complex tasks?

- Is the combination more effective than either type of input alone?

Implementation

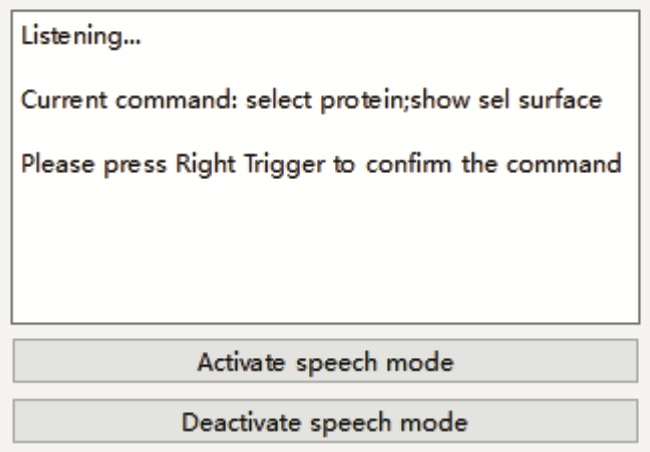

Oculus Quest 2 using ChimeraX VR + speech recognition

- headset microphone captures audio

- Google speech-to-text (STT) converts the audio to text

- the system tries to recognize compound queries and split them accordingly

- Word2vec converts the text to a word vector for comparison with a library of existing commands

- spaCy calculates cosine similarity and returns the most similar command

- in parallel, VR controller in “query mode” tracks real-time position
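
The text side of this pipeline (split compound queries, vectorize, pick the most similar library command) can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the command library, the function names, and the splitting rule are all hypothetical, and a plain bag-of-words count vector stands in for the word2vec embedding so that the example runs without any model download.

```python
import math
import re
from collections import Counter

# Hypothetical command library; the paper's actual library is not listed in these notes.
COMMAND_LIBRARY = [
    "show protein as stick",
    "hide protein",
    "color protein red",
    "select ligand",
]


def split_compound(query):
    """Split a compound query into simple ones on 'and', 'then', or 'and then'."""
    parts = re.split(r"\s+(?:and then|and|then)\s+", query.strip().lower())
    return [p for p in parts if p]


def vectorize(text):
    """Bag-of-words count vector (assumed stand-in for a word2vec embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def best_command(query, library=COMMAND_LIBRARY):
    """Return the library command most similar to the recognized text."""
    query_vec = vectorize(query)
    return max(library, key=lambda cmd: cosine(query_vec, vectorize(cmd)))


if __name__ == "__main__":
    spoken = "Show this protein as stick and color this protein red"
    for part in split_compound(spoken):
        print(part, "->", best_command(part))
```

Note that the deictic word "this" is simply tolerated as noise by the similarity match here; in the described system the controller position is what supplies its meaning.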

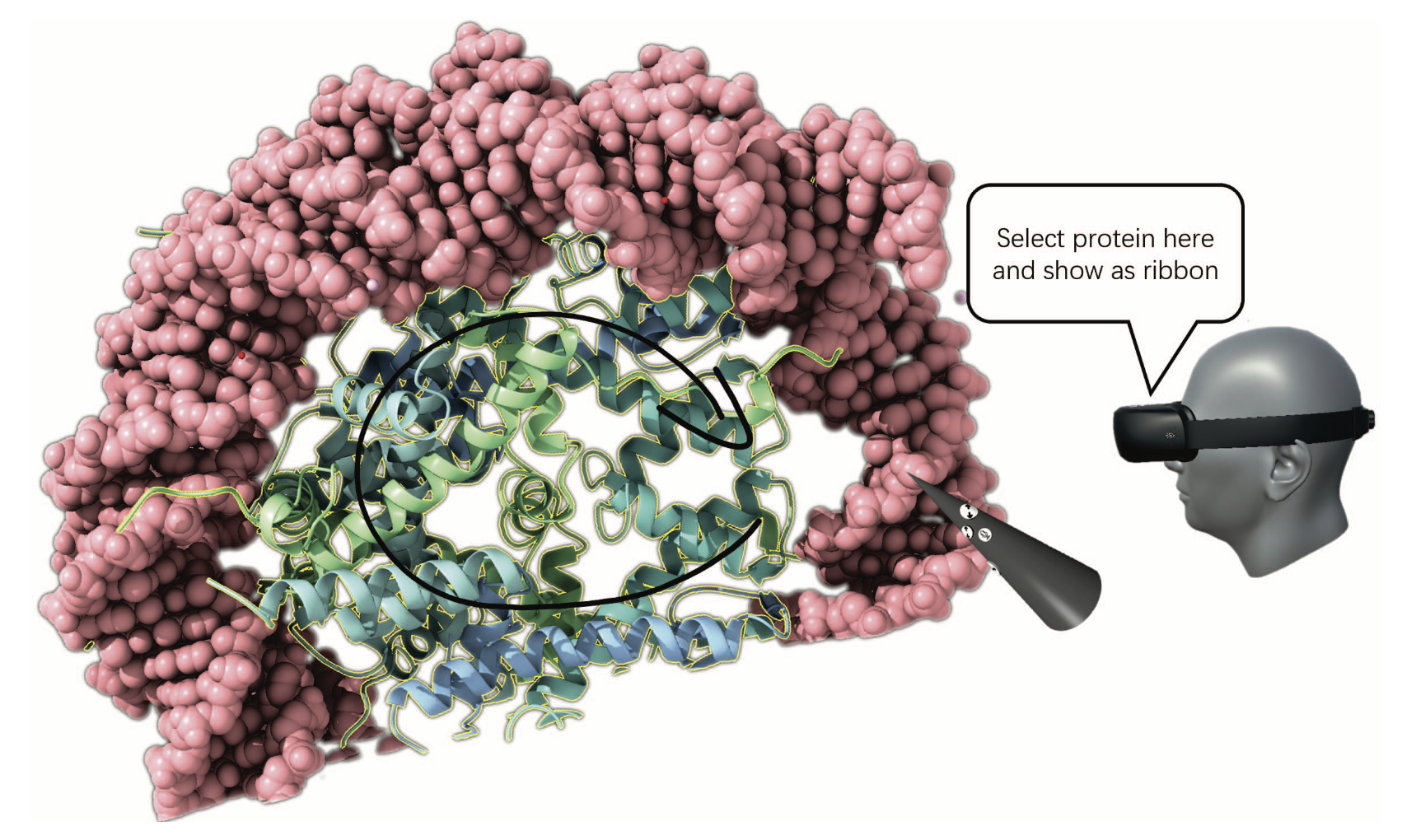

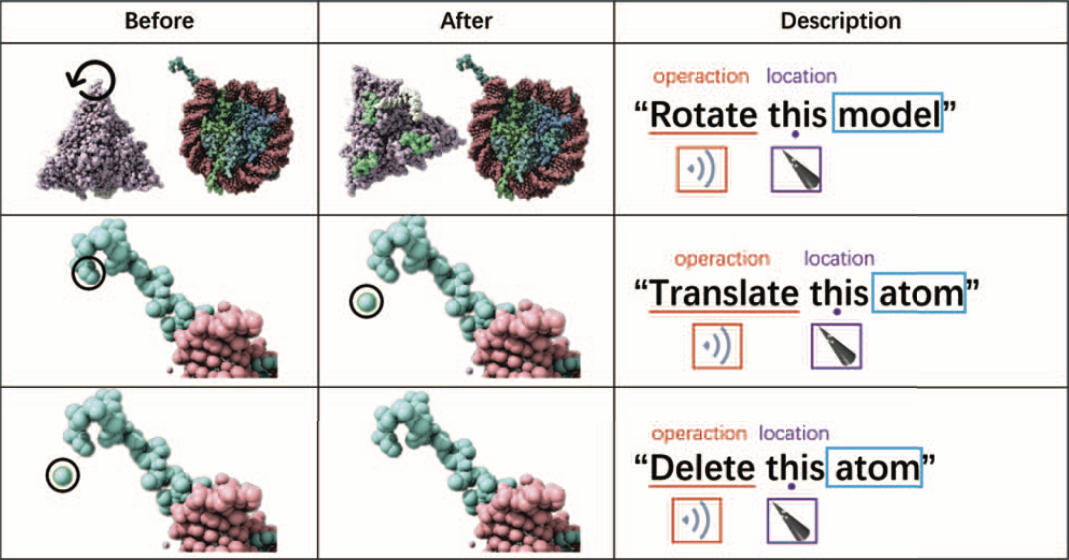

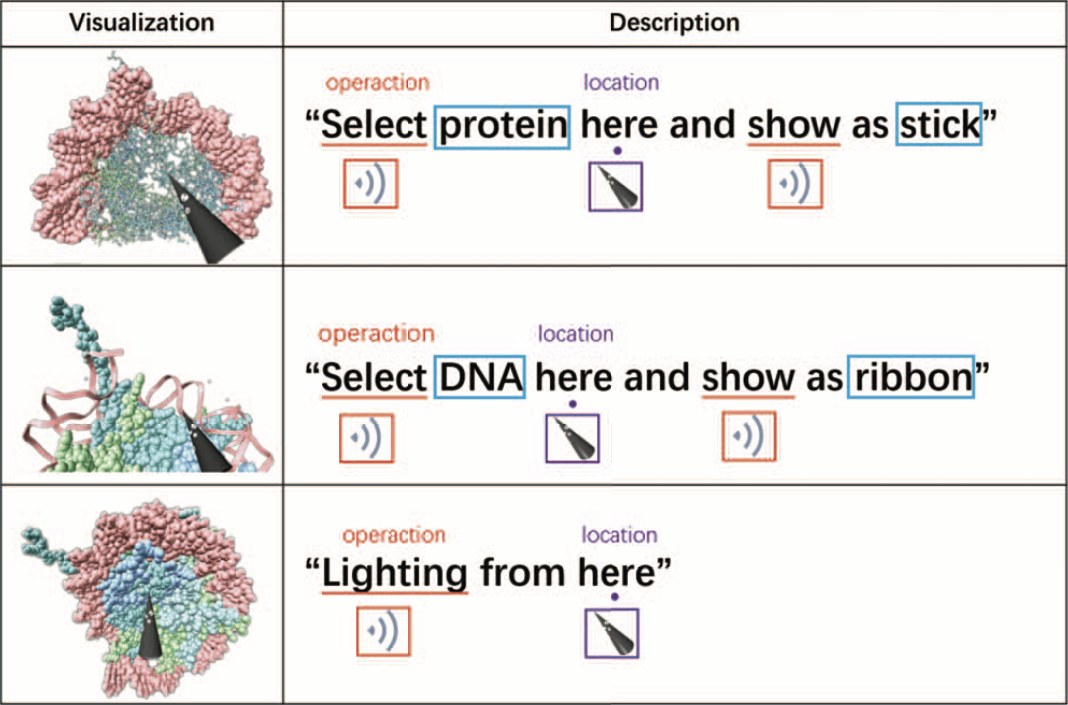

Interface and Simple Task Examples

- would it work to say “this chain” or “this residue”?

- how do atomspec strings relate to their library of commands? (the left-hand figure only shows a generic category, “protein”)

- does controller position-tracking automatically get the atomspec? (like our desktop mouse atomspec balloons)

Complex Task Examples

- if the target (e.g. protein) is not in the circumscribed region, nothing will happen

- does “this protein” expand to a single chain, or all protein in that model, or all protein in all models? (if the latter, the “this” is useless)

- the first two might be better without selection, e.g., “Show this protein as stick”

- ...however, their implementation probably requires a separate “select” action (cannot generate an atomspec for the “show” action)
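
One way to picture the "this" question is as a filter: the voice command supplies a category, the controller supplies a region, and the action applies to the intersection. The sketch below is only a guess at such a scheme, not the authors' implementation. The `Atom` record, the spherical region, and the per-atom scoping are all assumptions; whether the real system expands to a chain, a model, or all models is exactly what the notes above leave open.

```python
import math
from dataclasses import dataclass


@dataclass
class Atom:
    name: str         # hypothetical label, e.g. "A:CA"
    category: str     # e.g. "protein" or "ligand"
    position: tuple   # (x, y, z) in scene coordinates


def resolve_this(atoms, category, center, radius):
    """Atoms of the spoken category inside the sphere indicated by the controller."""
    return [
        a for a in atoms
        if a.category == category and math.dist(a.position, center) <= radius
    ]


def apply_command(atoms, action, category, center, radius):
    """Pair the action with its resolved targets, or return None if nothing matches."""
    targets = resolve_this(atoms, category, center, radius)
    if not targets:
        return None  # target not in the circumscribed region: nothing happens
    return (action, [a.name for a in targets])


if __name__ == "__main__":
    scene = [
        Atom("A:CA", "protein", (0.0, 0.0, 0.0)),
        Atom("B:CA", "protein", (10.0, 0.0, 0.0)),
        Atom("LIG:C1", "ligand", (1.0, 0.0, 0.0)),
    ]
    print(apply_command(scene, "show", "protein", (0.0, 0.0, 0.0), 2.0))
    print(apply_command(scene, "show", "protein", (50.0, 0.0, 0.0), 2.0))
```

Under this scheme "this" is meaningful only if the resolved set is narrower than the whole category; expanding the hits to their full chains or models would be a further step after `resolve_this`.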

Questions Redux

- How best to combine controller and speech input?

- the jury is out; the authors refer to the current approach as a prototype, but seem pleased with their initial progress

- Can the combination be used for complex tasks?

- some compound tasks are given as examples, but complexity may still be limited

- Is the combination more effective than either type of input alone?

- probably yes for novice users and the queries that their system can handle, but there may be many queries their system cannot handle

The authors basically punt by saying:

“Further studies are required to explore the prominent advantages of each interaction input in different exploration tasks for various data. Moreover, a comprehensive user study needs to be conducted to evaluate the usability and effectiveness of our method in data exploration.”

I also didn't see anything about availability of their code, but the corresponding author's email address is given.