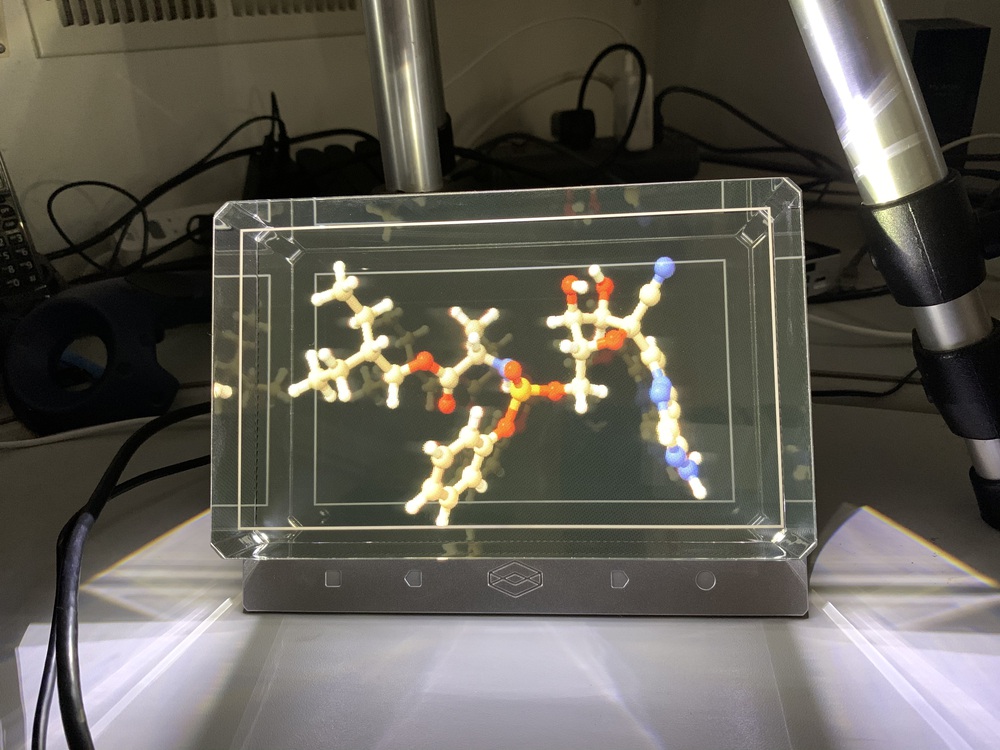

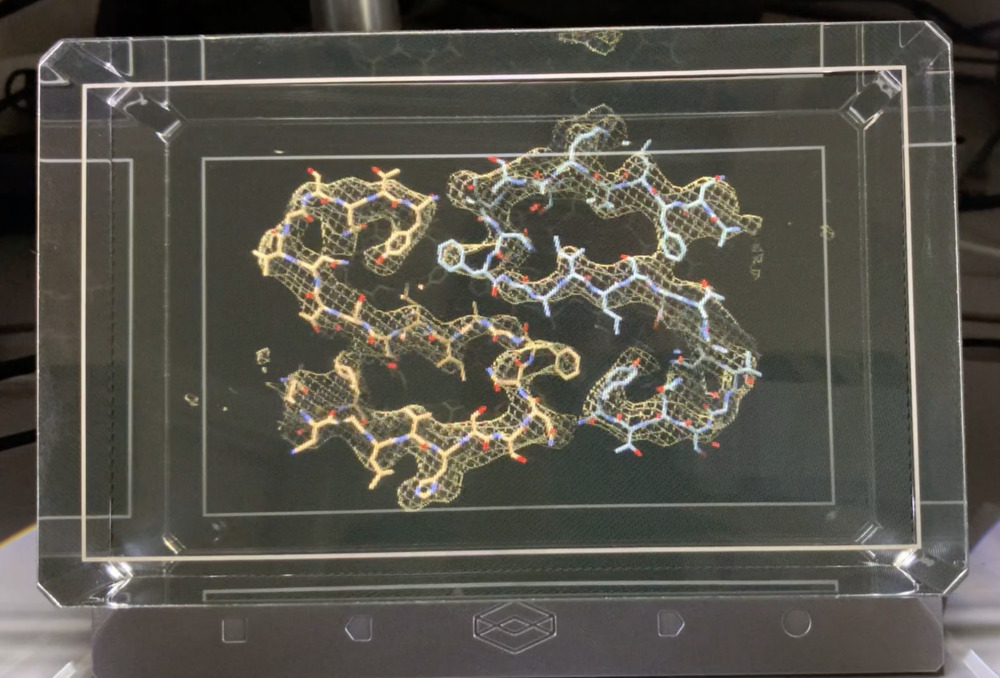

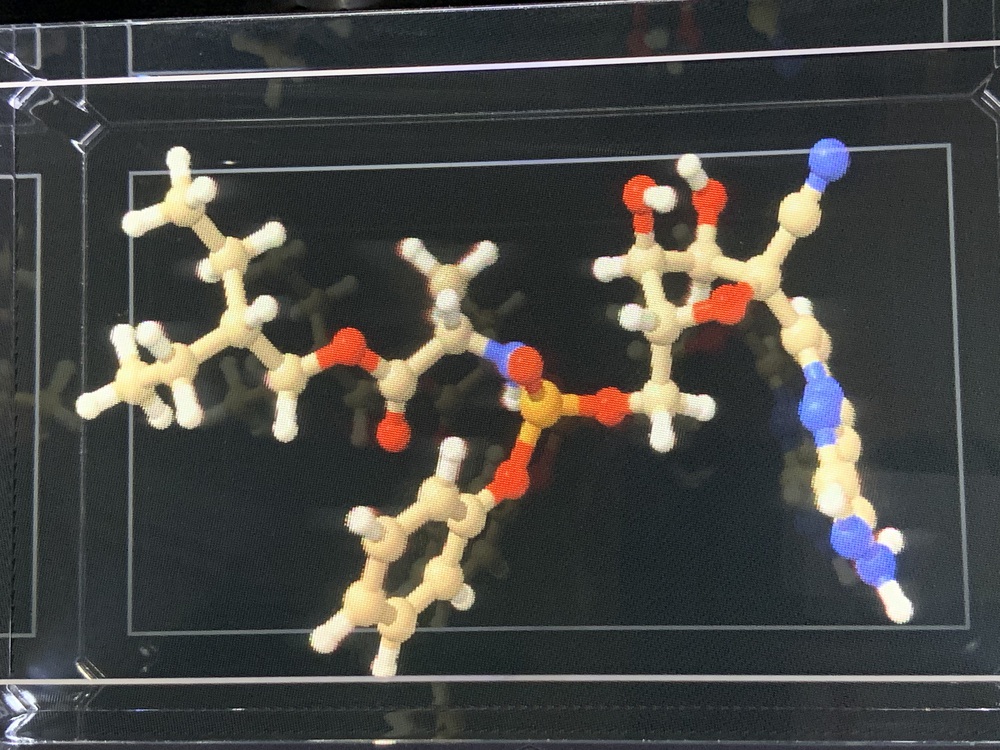

Drug remdesivir shown in 8.9" LookingGlass.

Videos: panning, molecular dynamics, and hand tracking.


Tom Goddard

July 12, 2020

ChimeraX can display molecules and maps (any 3D model) on LookingGlass 3D displays, which require no special glasses. The ChimeraX lookingglass command turns on use of the display:

look on

The HoloPlayService, which allows applications to find the LookingGlass display, must be running. This has been tested on macOS 10.15.5, Windows 10, and Ubuntu Linux 18.04.

Thanks to Leo Jin of Single Particle LLC for providing the LookingGlass display that allowed adding ChimeraX support. Single Particle builds turn-key computer systems for electron microscopy research and offers 3D solutions including 3DVision and LookingGlass.

LookingGlass displays have limited resolution and depth of field: objects displayed far in front of or far behind the middle depth appear blurry. Time-varying data is a potentially interesting use case. With time-varying data, the usual trick on a conventional display of rocking models side to side to perceive depth works poorly, but it is not needed on a 3D display.

Some possible uses of the LookingGlass display.

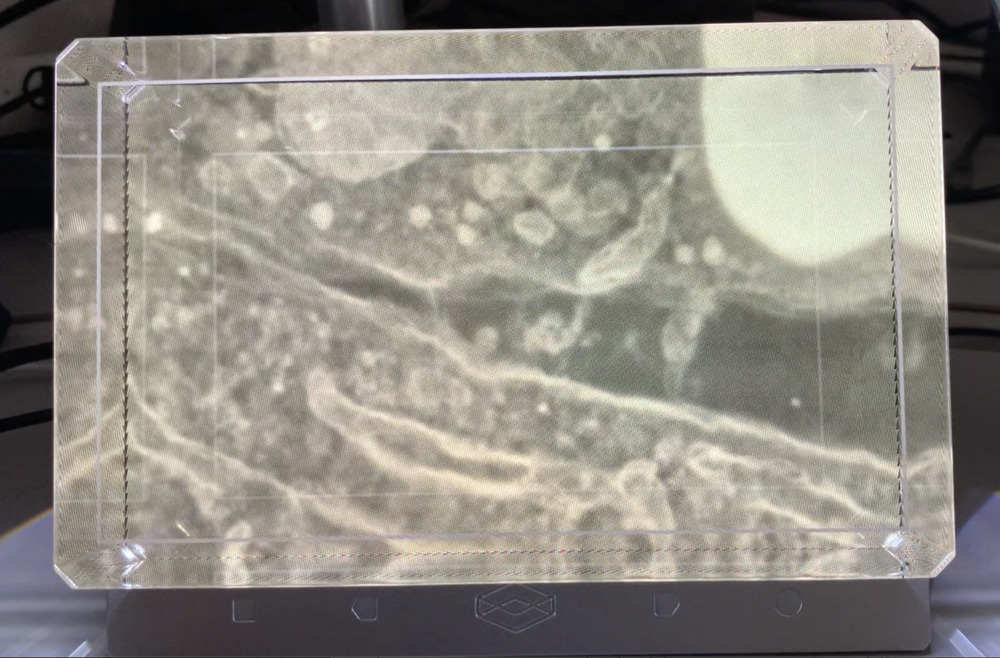

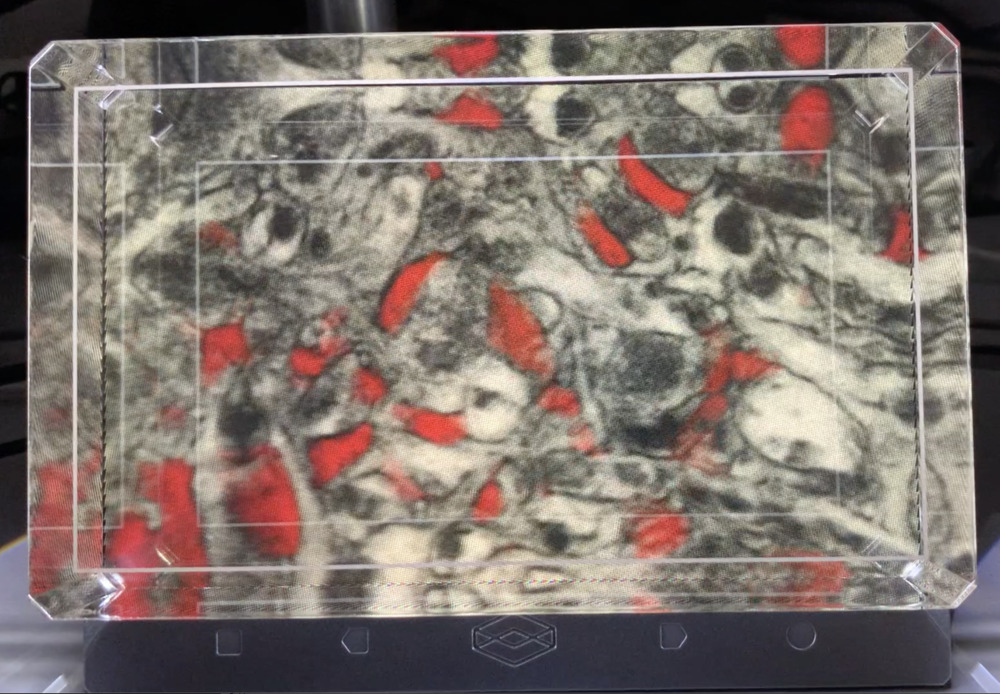

Electron tomogram of an immune system T-cell and target cell. Video.

3D light-sheet microscopy of a crawling neutrophil in collagen. Video.

Amyloid fibril implicated in type 2 diabetes and electron microscopy. Video.

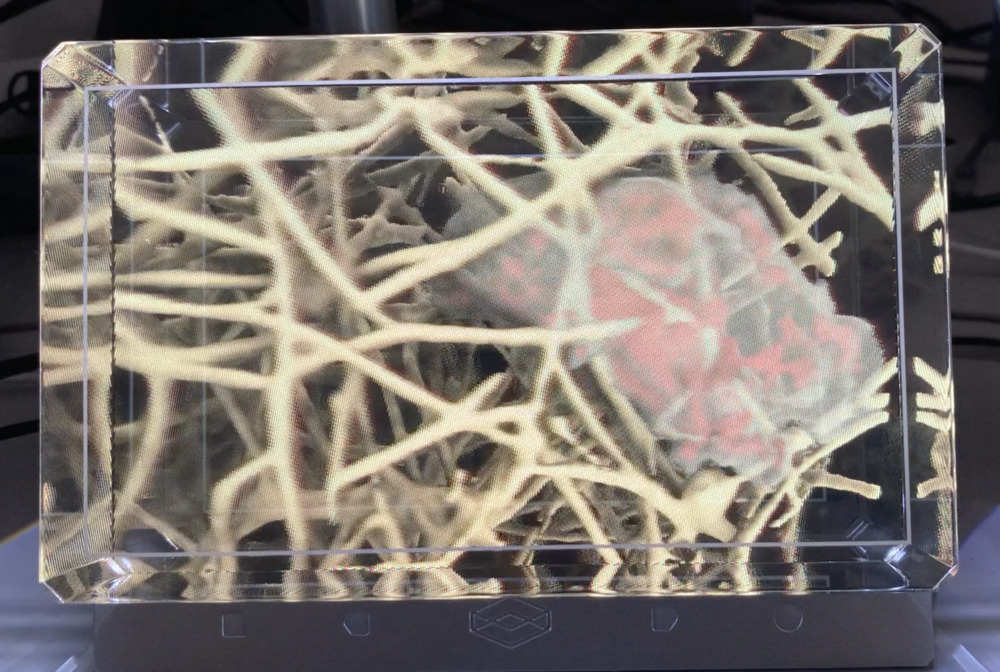

Synapses (red) in mouse brain seen by electron microscopy. Video.

I have only used the smallest LookingGlass display, the 8.9" model ($600). It connects by HDMI and USB and needs no separate power connection. LookingGlass Factory also sells a 15.6" model ($3000) and a 32" model (price not published).

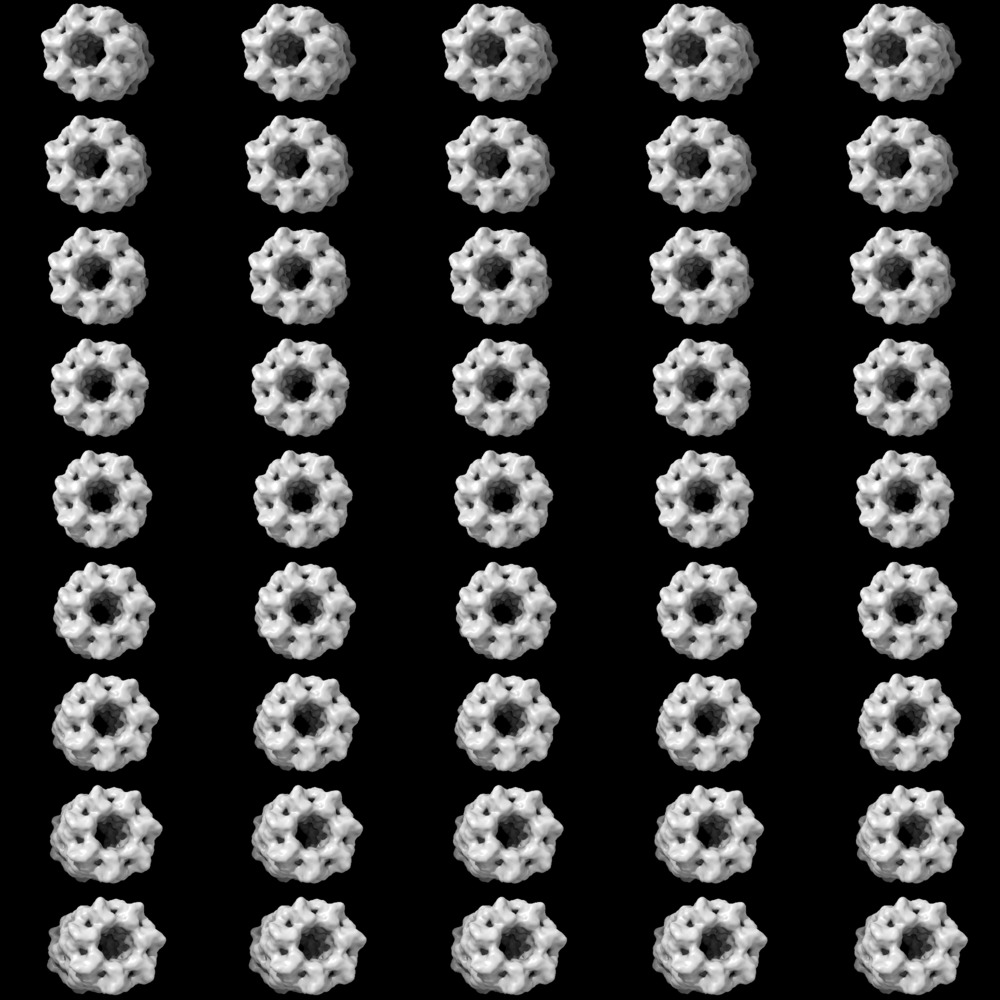

How it works. The display is lenticular and shows 45 images in a horizontal fan spanning 40 degrees; there are no vertical viewpoints. ChimeraX renders the 45 viewpoints into a single 4096 x 4096 image called a "quilt", laying them out in 9 rows and 5 columns, with the lower-left image the leftmost viewpoint and the upper-right image the rightmost viewpoint. Individual views are 819 x 455 pixels (4096 / 5 and 4096 / 9, rounded down). More details are in the LookingGlass documentation on camera viewpoints and the quilt image. The quilt image is then rendered to the LookingGlass display using a custom OpenGL shader that interleaves the 45 images in tilted vertical strips.
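A minimal Python sketch of the quilt layout may help. It assumes the views fill each row left to right and the rows bottom to top (which puts view 0 at lower left and view 44 at upper right, as stated above), with tile coordinates measured from the lower-left corner as in OpenGL. The evenly spaced view angles across the 40 degree fan are a simplification, and `tile_viewport` and `view_angle` are hypothetical helpers, not ChimeraX functions.

```python
# Sketch of the quilt layout: 45 views in 5 columns x 9 rows of a
# 4096 x 4096 texture. Assumes views fill rows left to right and rows
# bottom to top, and that view angles are evenly spaced over 40 degrees.

QUILT_SIZE = 4096
COLUMNS, ROWS = 5, 9
NUM_VIEWS = COLUMNS * ROWS          # 45
VIEW_W = QUILT_SIZE // COLUMNS      # 819
VIEW_H = QUILT_SIZE // ROWS         # 455
FAN_DEGREES = 40.0


def tile_viewport(view):
    """Return (x, y, width, height) of a view's tile, origin at lower left."""
    if not 0 <= view < NUM_VIEWS:
        raise ValueError(f"view must be in 0..{NUM_VIEWS - 1}")
    column, row = view % COLUMNS, view // COLUMNS
    return (column * VIEW_W, row * VIEW_H, VIEW_W, VIEW_H)


def view_angle(view):
    """Approximate horizontal view angle in degrees (negative = left)."""
    return -FAN_DEGREES / 2 + FAN_DEGREES * view / (NUM_VIEWS - 1)


for v in (0, 22, 44):
    print(v, tile_viewport(v), round(view_angle(v), 2))
# 0 (0, 0, 819, 455) -20.0
# 22 (1638, 1820, 819, 455) 0.0
# 44 (3276, 3640, 819, 455) 20.0
```

With this layout view 22 lands in the middle column of the middle row, matching its later description as the centermost view. The LookingGlass documentation describes how the real cameras are placed, which may differ from a simple rotation by these angles.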

Quilt image.

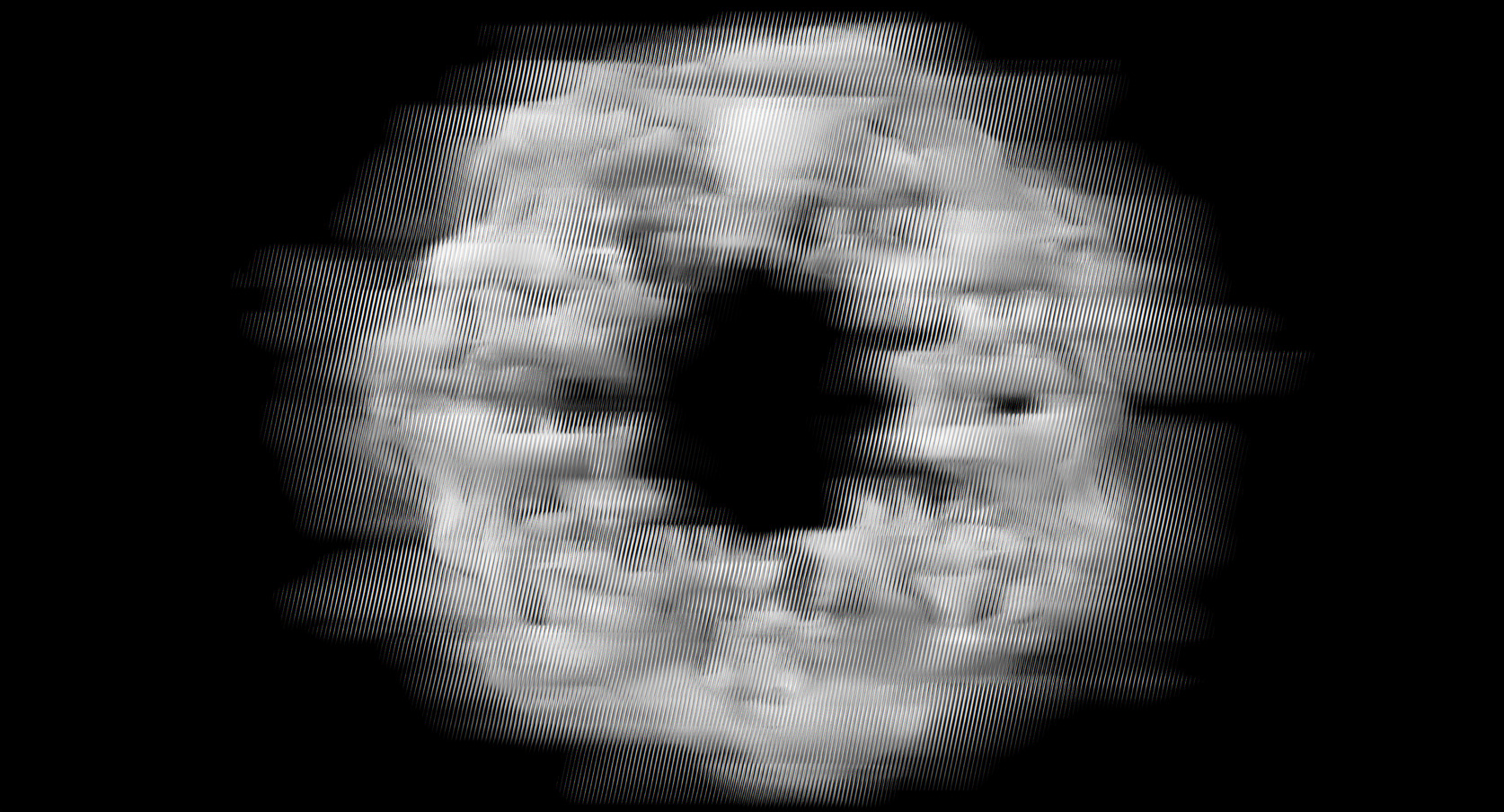

The image sent to the LookingGlass display panel encodes the 45 images in strips that are refracted by an optical lenticular grating into a horizontal fan of 45 different directions.

Resolution. For the 8.9" display the approximate resolution is 355 x 256 pixels. This results from the lenticular grating of the device having 355 strips, each of which refracts the 45 viewpoint images in different directions. The 2560 x 1600 pixels of the underlying screen are shared by the 45 viewpoint images (355 x 256 x 45 ≈ 2560 x 1600, about 4.1 million pixels). This resolution is about 7 times lower along x and y than a conventional 2D display (sqrt(45) ≈ 6.7), because of the need to project 45 images in different directions. Although I have not tried the larger LookingGlass displays, the two larger models also use a fan of 45 images. The expected resolution of the 15.6" model would be 572 x 322 (= 3840/n x 2160/n, where n = sqrt(45)), and of the 32" model 1145 x 644 (= 7680/n x 4320/n).
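The resolution arithmetic can be checked in a few lines of Python. This sketch (with hypothetical helper names) applies the sqrt(45) scaling used above for the larger models and, for the 8.9" model, derives the vertical resolution from the 355-strip horizontal resolution and the per-view pixel budget.

```python
import math

NUM_VIEWS = 45


def per_view_resolution(panel_w, panel_h, views=NUM_VIEWS):
    """Isotropic estimate: shrink both panel dimensions by sqrt(views)."""
    n = math.sqrt(views)
    return round(panel_w / n), round(panel_h / n)


def vertical_resolution_given_strips(panel_w, panel_h, strips, views=NUM_VIEWS):
    """If the strip count fixes horizontal resolution, the remaining
    per-view pixel budget sets the vertical resolution."""
    return round(panel_w * panel_h / (views * strips))


print(per_view_resolution(3840, 2160))                    # (572, 322), 15.6" model
print(per_view_resolution(7680, 4320))                    # (1145, 644), 32" model
print(vertical_resolution_given_strips(2560, 1600, 355))  # 256, 8.9" model
```

Applying the isotropic formula to the 8.9" panel gives about 382 x 239 instead; the 355 x 256 figure takes the 355 lenticular strips as the horizontal resolution.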

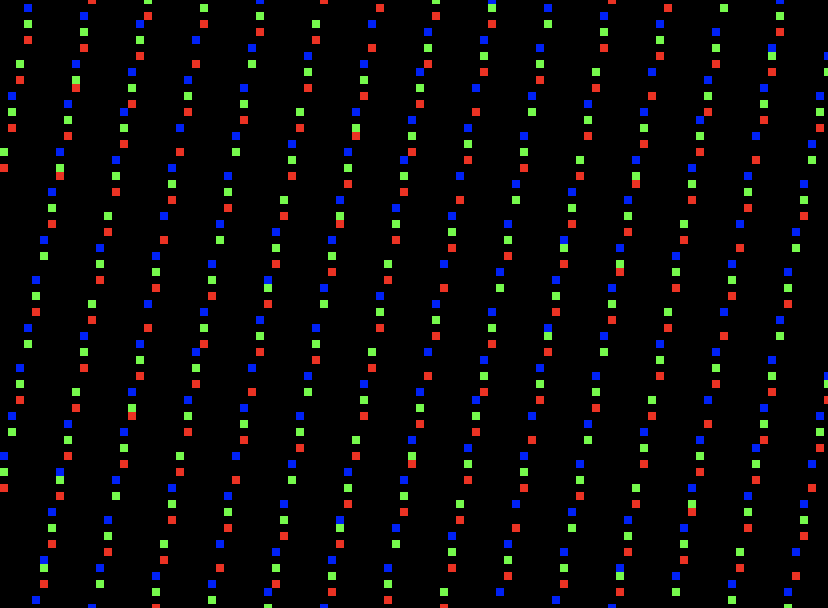

Closeup: pixelation is isotropic.

Horizontally blurred white atom in front of the midplane.

Zoomed-in view of the pixels on the LookingGlass panel used to present one view direction (the centermost view, tile 22). Note that the green pixels form tilted squares, giving nearly isotropic sampling. Full panel image.

Lenticular sampling pattern. The LookingGlass display uses a neat trick to partition the 45 images across the underlying display panel so that horizontal and vertical resolutions are about the same. A simple lenticular implementation, with the lenticular strips oriented vertically and aligned with the pixels, would have a horizontal resolution of only about 57 pixels (2560 / 45) and a vertical resolution of 1600 pixels, since each column of pixels would belong to one of the 45 images. Instead the LookingGlass rotates the lenticular strips 10.3 degrees to the right. A strip of the lenticular lens is only about 7 pixels wide, and all 45 images appear in that strip by using subpixel positions 0.155 (= 7/45) pixels apart. Because of the rotation, a vertical column of pixels progresses across the lenticular strip in subpixel steps, allowing the 1600 pixel vertical panel resolution to sample each of the 45 images at vertical spacings of about 6 pixels (= 45/7). The zoomed-in panel image shows the pattern of panel pixels used to sample one of the 45 view images (tile 22, the central one). Red, green, and blue samples are taken at different positions because the red, green, and blue emitters of the panel sit physically side by side with small subpixel spacing.
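To make the tilted-strip idea concrete, here is a simplified Python model of which view each panel pixel shows. It is not the HoloPlayCore shader: it assumes a strip pitch of 2560 / 355 pixels (from the 355 strips mentioned earlier), a 10.3 degree tilt whose sign is a guess, no per-device calibration offset, and it ignores the separate red, green, and blue subpixel positions. `view_of_pixel` and `pixels_per_view` are hypothetical names.

```python
import math

PANEL_W, PANEL_H = 2560, 1600
NUM_VIEWS = 45
STRIPS = 355                   # lenticular strips across the panel (from the text)
PITCH = PANEL_W / STRIPS       # about 7.2 panel pixels per strip
# Horizontal shift of a strip per pixel row for a 10.3 degree tilt.
# The sign (strips leaning right going up the panel) is an assumption.
TILT = math.tan(math.radians(10.3))


def view_of_pixel(x, y):
    """Return which of the 45 views panel pixel (x, y) shows (simplified).

    The pixel's fractional position across its tilted strip is split
    evenly among the 45 views. Subpixel RGB offsets are ignored.
    """
    phase = ((x - y * TILT) / PITCH) % 1.0
    # Clamp guards against % returning 1.0 for tiny negative values.
    return min(int(phase * NUM_VIEWS), NUM_VIEWS - 1)


def pixels_per_view(width=PANEL_W, height=PANEL_H):
    """Count how many pixels in a width x height region belong to each view."""
    counts = [0] * NUM_VIEWS
    for y in range(height):
        for x in range(width):
            counts[view_of_pixel(x, y)] += 1
    return counts


counts = pixels_per_view(512, 400)   # a panel region; the full panel works too
print(min(counts), max(counts), sum(counts) / NUM_VIEWS)
```

In this model each view receives roughly 1/45 of the pixels, spread over the whole panel rather than confined to single columns. Counting the full 2560 x 1600 panel in pure Python takes a few seconds.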

Smoothing. The LookingGlass presents about 5 neighboring view directions to each eye. This has the nice effect that moving the head side to side reveals no abrupt jumps where the scene transitions from one view image to the next, since 5 are always being blended. This is a natural consequence of the rotated lenticular strips, which place 45 view directions in strips only 7 pixels wide. Imagine 45 parallel lines equally spaced within a lenticular strip: each pixel belongs to a single viewpoint image, but it crosses about 6 (= 45 / 7) of these lines and so is refracted in 6 of the 45 directions. Because the panel pixels have unilluminated gaps between them, each pixel is probably cast in closer to 4-5 directions.
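The smoothing estimate is simple arithmetic: a pixel spans 1/7 of a strip that holds all 45 directions. In this small sketch `lit_fraction` is a hypothetical stand-in for the illuminated fraction of each pixel's width; the 0.7 used below is illustrative, not a measured panel property.

```python
NUM_VIEWS = 45


def directions_per_pixel(strip_width_px=7.0, lit_fraction=1.0):
    """Approximate number of the 45 view directions one pixel is refracted into.

    A pixel covers 1/strip_width_px of a strip holding all 45 directions, so a
    fully lit pixel spans 45 / strip_width_px of them. lit_fraction is a
    hypothetical illuminated fraction of the pixel width.
    """
    return NUM_VIEWS * lit_fraction / strip_width_px


print(round(directions_per_pixel(), 2))                  # 6.43
print(round(directions_per_pixel(lit_fraction=0.7), 2))  # 4.5
```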

Depth and blur. I'd estimate the usable depth is about half the width of the display. Any amount of depth can be shown, but objects far from the mid-depth plane appear blurred. This is another consequence of each eye seeing 5 neighboring view directions. If an object is at mid-depth, those view directions align and show nearly the same appearance. Away from mid-depth, parallax shifts the different view images horizontally, and the 5 images combine into a horizontal smear, as shown in the image above. ChimeraX puts the center of the bounding box of the displayed models at the mid-depth plane. To move the models back or forward to focus on features away from the box center, use the mouse scroll wheel with the shift key held, or bind the "lookingglass depth" mouse mode to a mouse button of your choice (e.g. command "mouse shift right lookingglass"). Thanks to Tom Skillman for suggesting this focus control.
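The blur mechanism can be roughed out geometrically. This sketch assumes the 5 views seen by one eye are evenly spaced across the 40 degree fan (40/44 degrees apart) and that a point a distance d from the mid-depth plane shifts sideways by about d times the tangent of the angle between views. Depth is given as a fraction of the display width, and the smear is expressed in units of the 355 horizontal resolution elements of the 8.9" model. It is an approximation for intuition, not a model of the actual optics; `smear_in_pixels` is a hypothetical name.

```python
import math

NUM_VIEWS = 45
FAN_DEGREES = 40.0
VIEWS_PER_EYE = 5   # from the text: each eye sees about 5 neighboring views
STRIPS = 355        # horizontal resolution elements of the 8.9" model


def smear_in_pixels(depth_fraction):
    """Approximate horizontal blur, in display resolution elements, of a point
    depth_fraction * (display width) in front of or behind the mid-depth plane.
    """
    step = math.radians(FAN_DEGREES / (NUM_VIEWS - 1))  # angle between views
    span = (VIEWS_PER_EYE - 1) * step                   # angle covered by 5 views
    # Sideways spread across the span, as a fraction of the display width.
    smear = abs(depth_fraction) * 2.0 * math.tan(span / 2.0)
    return smear * STRIPS


for depth in (0.0, 0.1, 0.25):
    print(depth, round(smear_in_pixels(depth), 1))
```

In this model the smear is zero at the mid-depth plane and grows linearly with distance in front of or behind it, which is why shifting the mid-depth plane onto the features of interest sharpens them.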

Brightness. The LookingGlass display is dimmer than a conventional display, but this is usually not a problem. I noticed it only in a room brightly lit by daylight through windows, where a Mac laptop display next to the LookingGlass was significantly brighter. The display appears dimmer because each eye sees only about 10% (5 of 45 viewpoints) of the underlying panel pixels. The underlying panel is probably very bright to compensate, so that the 3D image is bright enough with only 10% of the pixels.

Rendering speed. Models don't render as fast as on a conventional display, since 45 different views are rendered for each change in the scene; naively this would give a 45 times slower frame rate. ChimeraX lighting modes (soft and full) that cast ambient shadows (64 directions) significantly slow rendering. A 20,000 atom PDB model shown as spheres, with sticks for ligands, renders at 2 frames per second with full lighting and 8 frames per second with simple lighting (no ambient shadows). A small molecule (remdesivir, 77 atoms) renders at 30 frames per second. A 192³ cryoEM map (EMDB 30210) surface renders at 30 frames per second with simple lighting and 15 frames per second with full lighting. These times are on a 2019 MacBook Pro with Radeon Pro Vega 20 graphics (speed equivalent to an Nvidia GTX 1050).

Rendering details. ChimeraX LookingGlass rendering uses the recommended rendering size of 819 x 455 pixels per view, tiled in 9 rows and 5 columns of a 4096 x 4096 texture. This is about 4 times the number of pixels of the underlying 8.9" LookingGlass panel, so it is a bit wasteful of GPU resources. But the main overhead in ChimeraX is very likely not the number of pixels (i.e. fill rate), but setting up 45 different renderings in Python, each requiring 10-50 OpenGL calls. All 45 images are rendered directly to the 4K x 4K texture. Fancy GPU techniques developed for VR allow rendering multiple view directions in one pass and could speed up rendering, but ChimeraX does not have the code to do that.
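The pixel and call-count overheads above are easy to tabulate. This small sketch uses only numbers from the text; the helper name `quilt_overhead` is hypothetical.

```python
def quilt_overhead(views=45, tile=(819, 455), panel=(2560, 1600),
                   gl_calls_per_view=(10, 50)):
    """Return (rendered/displayed pixel ratio, (min, max) OpenGL calls per frame)."""
    rendered = views * tile[0] * tile[1]   # pixels drawn into the quilt tiles
    displayed = panel[0] * panel[1]        # physical panel pixels
    low, high = gl_calls_per_view
    return rendered / displayed, (views * low, views * high)


ratio, calls = quilt_overhead()
print(round(ratio, 2), calls)   # 4.09 (450, 2250)
```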

Use on Windows. There were problems with the computer recognizing the LookingGlass screen on Windows 10, and ChimeraX is not able to reliably determine which connected display is the LookingGlass. The screen names seen by the Qt 5.12.7 window toolkit are DISPLAY1, DISPLAY4, ... If there is only one secondary display, the ChimeraX lookingglass command assumes it is the LookingGlass.

Linux: Problems connecting the LookingGlass. On Linux (Ubuntu 18.04) the operating system frequently did not see the LookingGlass display at all; web searches suggest a problem with detecting HDMI-connected secondary displays. After a reboot the computer sometimes detected the LookingGlass but made it the primary display, which made logging in difficult, since its resolution is barely adequate to see the mouse pointer and inadequate to read any text. The hardware was an Intel NUC 5i5RYK. By booting with only the LookingGlass display connected, then connecting the normal display via DisplayPort, I was able to get both displays working. As on Windows, there is no reliable way for ChimeraX to know which connected display is the LookingGlass, since Qt gives display names such as dp-1 and hdmi-1. So here too the ChimeraX lookingglass command simply assumes a single secondary display must be the LookingGlass.
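The display-selection rule described for Windows and Linux can be written as a small pure function. This is a hypothetical sketch, not the ChimeraX code: it takes the screen names (with Qt 5 these could come from `QGuiApplication.screens()` and `QScreen.name()`), the primary screen's name, and optionally a display name reported by the device SDK, which per the text only macOS provided.

```python
def pick_lookingglass_screen(screen_names, primary_name, device_name=None):
    """Guess which connected screen is the LookingGlass (hypothetical helper).

    screen_names: names of all connected screens.
    primary_name: name of the primary screen.
    device_name:  display name reported by the device SDK, if available.
    Returns the chosen screen name, or None if the choice is ambiguous.
    """
    # Prefer an explicit name from the device SDK when it matches a screen.
    if device_name is not None and device_name in screen_names:
        return device_name
    # Otherwise assume a single secondary screen must be the LookingGlass.
    secondary = [name for name in screen_names if name != primary_name]
    if len(secondary) == 1:
        return secondary[0]
    return None


print(pick_lookingglass_screen(["DISPLAY1", "DISPLAY4"], "DISPLAY1"))
print(pick_lookingglass_screen(["dp-1", "hdmi-1", "hdmi-2"], "dp-1"))
```

Returning None instead of guessing leaves the caller free to ask the user which screen to use.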

macOS Catalina: Problems connecting the LookingGlass. A 2019 MacBook Pro often failed to see the connected LookingGlass display. Some hours of debugging found a reliable way to connect it and suggested what the problem is. This laptop has only USB-C ports, so an adapter is needed for both the USB-A and HDMI LookingGlass connections. I initially used my standard adapter, an Elando Model C805, which has 3 USB-A jacks and an HDMI jack behind a single USB-C connection to the laptop. If I plugged the LookingGlass USB-A and HDMI cables into this adapter and then plugged the adapter into the laptop, it almost always failed to see the display; disconnecting and reconnecting the HDMI did not fix it, nor did restarting the LookingGlass service. But using a different adapter (QGeeM model 6543782745, USB-C to HDMI) for the HDMI worked: first plug in the USB-A via the Elando, then the HDMI via the QGeeM adapter. Later I found that plugging the USB-A into the Elando first, then the Elando into the laptop, then the HDMI into the Elando also works. The 8.9" display is powered by USB. It appears that when the USB-A and HDMI are both plugged into the Elando before it is connected to the laptop's USB-C port, the LookingGlass may still be powering up while the HDMI connection is being established, causing it to fail. This adapter has always worked with other HDMI displays, but they were not powered by the adapter.

Secondary screen. Unfortunately LookingGlass has fallen into the same pitfall as early VR such as the Oculus Rift developer kit 1. The Looking Glass device appears as a display to the Windows, macOS, and Linux operating systems, causing no end of problems for users and developers. The problem, just as for VR headsets, is that this is not a usable display for normal computer use: text is unreadable. As mentioned above, Linux decided the LookingGlass was the primary display. The LookingGlass setup instructions have many details aimed specifically at troubleshooting this poor design decision. For instance, the user is expected to switch the display so it does not mirror the primary display (it mirrored by default on the Mac). The user is supposed to arrange the display position. The recommended position to the left of the main display on macOS put my Mac Dock on the LookingGlass, where it was unusable, so I ignored the recommendation. On Windows the LookingGlass Library application, which manages LookingGlass applications, could not figure out which display was the LookingGlass; each time it showed a dialog asking which display was the LookingGlass, with the menu choice always set to the wrong display. The experience of the LookingGlass as a second display is abysmal, as it was with early VR implementations. Fortunately VR figured out the right solution, where the headset is not a second display but is detected directly by the device driver (called "direct mode" in early VR). No doubt this is harder for LookingGlass to implement than the current scheme, but the current usability is too poor for a viable consumer product.

HoloPlayCore SDK issues. The HoloPlayCore SDK also repeats the mistakes of early VR, making it many times harder for potential developers to use the device. For ChimeraX it took just a few hours of writing code to render the quilt image of 45 views. Rendering different camera views is a standard capability of any 3D application. At that point I would expect to hand that texture to the SDK and have it take care of all the implementation details of rendering it to the device. That is how current VR SDKs (OpenVR, Oculus) work. Instead I spent about 4 times as long doing a poor job of the implementation details HoloPlayCore should handle. First I have to find the display. There is no good solution on Windows or Linux; only macOS provided the LookingGlass display name. Then I have to create a full-screen OpenGL rendering surface on that display, which took several hours and some gross hacks to get the Qt 5.12 window toolkit to do reliably on Windows and Linux (macOS worked easily). Then I have to set up and run the HoloPlayCore graphics shader in this secondary application window. It requires a dozen device parameters that I read from the HoloPlayCore SDK to set the shader parameters. Although ChimeraX has some capability to render multiple windows simultaneously (otherwise this would have turned into a week of work), having the application developer run the nitty-gritty device rendering code is senseless. All of these steps (finding the device, creating a full-screen window, compiling and running shader code) have to be done by every application, and they are considerably harder than just rendering quilt images. Early VR (Oculus DK1) also took this shortcut of putting all the low-level device handling on the application developer. It is understandable, since LookingGlass is a tiny company and making a proper SDK takes significant work. When VR switched to "direct mode" rather than treating the device as a display, the SDK also took over all the connection and rendering duties.
If LookingGlass is not able to do this, I think it is unlikely the product will survive, because little useful software will be developed for it. HoloPlayCore is no doubt a fringe API, with almost all development done in Unity or Unreal. I did not check whether those SDKs require application code to integrate their shaders, target display, and window creation.

Available LookingGlass applications. While the LookingGlass has potential for biology researchers looking at 3D data, the most discouraging sign was the paucity of existing applications of any value. Another molecular biology visualization application, PyMOL (also originally developed at UC San Francisco, now developed by Schrödinger), has support in its paid "incentive" version. I attempted to use the 3D Model Importer application. It was said to be available through the LookingGlass "Library" application (bad name), but I was not able to find it after extensive searching. The "Library" application has about 50 gimmick demos; after trying about 10 of them and not seeing a glimpse of anything useful for more than a 30 second laugh, I was discouraged. I hope there are some useful applications for the device. LookingGlass needs to direct users and developers to the best software instead of this "Library" junk collection.

ChimeraX LookingGlass code. The ChimeraX LookingGlass code is written entirely in Python plus an OpenGL shader. It uses Python ctypes to access the HoloPlayCore C API and obtain the OpenGL shader parameters for rendering, in file holoplay.py (336 lines). The ChimeraX command, quilt rendering camera, window creation, and focus mouse mode are in lookingglass.py (460 lines).

Leap Motion hand tracking input. ChimeraX can use Leap Motion hand tracking to move models, tug on atoms and emulate any of dozens of mouse modes or VR modes.